Announcing the release of Spice v1.9.0-rc.2! 🌶

This is the second release candidate for v1.9.0, which introduces Spice Cayenne, a new high-performance data accelerator built on the Vortex columnar format that delivers better than DuckDB performance without single-file scaling limitations and a preview of Multi-Node Distributed Query based on Apache Ballista. v1.9.0-rc.2 also upgrades to DataFusion v50 and DuckDB v1.4.1 for even higher query performance, expands search capabilities with full-text search on views and multi-column embeddings, includes significant DynamoDB and DuckDB accelerator improvements, expands the HTTP data connector to support endpoints as tables, and delivers many security and reliability improvements.

Introducing Cayenne: SQL as an Acceleration Format: A new high-performance Data Accelerator that simplifies multi-file data acceleration by using an embedded database (SQLite) for metadata while storing data in the Vortex columnar format, a Linux Foundation project. Cayenne delivers query and ingestion performance better than DuckDB's file-based acceleration without DuckDB's memory overhead and the scaling challenges of single DuckDB files.

Cayenne uses SQLite to manage acceleration metadata (schemas, snapshots, statistics, file tracking) through simple SQL transactions, while storing data in Vortex's compressed columnar format.

Key Features:

- SQLite + Vortex Architecture: All metadata is stored in SQLite tables with standard SQL transactions, while data lives in Vortex's compressed, chunked columnar format designed for zero-copy access and efficient scanning.

- Simplified Operations: No complex file hierarchies, no JSON/Avro metadata files, no separate catalog servers—just SQL tables and Vortex data files. The entire metadata schema is intentionally simple for maximum reliability.

- Fast Metadata Access: Single SQL query retrieves all metadata needed for query planning—no multiple round trips to storage, no S3 throttling, no reconstruction of metadata state from scattered files.

- Efficient Small Changes: Dramatically reduces small file proliferation. Snapshots are just rows in SQLite tables, not new files on disk. Supports millions of snapshots without performance degradation.

- High Concurrency: Changes consist of two steps: stage Vortex files (if any), then run a single SQL transaction. Much faster conflict resolution and support for many more concurrent updates than file-based formats.

- Advanced Data Lifecycle: Full ACID transactions, delete support, and retention SQL execution on refresh commit.
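To make the two-step commit and the "snapshots are just rows" idea concrete, here is a minimal Python sketch using the standard-library sqlite3 module. The table names, columns, and helper functions (snapshots, data_files, commit_append, files_for_planning) are hypothetical and chosen for illustration only; they are not Cayenne's actual metadata schema or API.

```python
import sqlite3

# Hypothetical metadata schema for illustration; not Cayenne's actual tables.
SCHEMA = """
CREATE TABLE snapshots (
    snapshot_id INTEGER PRIMARY KEY AUTOINCREMENT,
    created_at  TEXT NOT NULL DEFAULT CURRENT_TIMESTAMP
);
CREATE TABLE data_files (
    path           TEXT PRIMARY KEY,
    row_count      INTEGER NOT NULL,
    added_snapshot INTEGER NOT NULL REFERENCES snapshots(snapshot_id)
);
"""


def open_catalog(path=":memory:"):
    """Open (or create) the metadata database and install the schema."""
    conn = sqlite3.connect(path)
    conn.executescript(SCHEMA)
    return conn


def commit_append(conn, staged_files):
    """Step 2 of a change: record already-staged data files in one transaction.

    staged_files is a list of (path, row_count) pairs written in step 1.
    """
    with conn:  # commits on success, rolls back if any statement fails
        cur = conn.execute("INSERT INTO snapshots DEFAULT VALUES")
        snapshot_id = cur.lastrowid
        conn.executemany(
            "INSERT INTO data_files (path, row_count, added_snapshot) "
            "VALUES (?, ?, ?)",
            [(path, rows, snapshot_id) for path, rows in staged_files],
        )
    return snapshot_id


def files_for_planning(conn):
    """A single query returns every file a planner would need to consider."""
    return conn.execute(
        "SELECT path, row_count FROM data_files ORDER BY path"
    ).fetchall()
```

In this sketch a failed commit (for example, a duplicate file path) rolls back both the snapshot row and any file rows, so readers never observe a half-applied change.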

Example Spicepod.yml configuration:

datasets:
  - from: s3:my_table
    name: accelerated_data_30d
    acceleration:
      enabled: true
      engine: cayenne
      mode: file
      refresh_mode: append
      retention_sql: DELETE FROM accelerated_data WHERE created_at < NOW() - INTERVAL '30 days'

Note, the Cayenne Data Accelerator is in Beta with limitations.

For more details, refer to the Cayenne Documentation, the Vortex project, and the DuckLake announcement that partly inspired this design.

Apache Ballista Integration: Spice now supports distributed query execution based on Apache Ballista, enabling distributed queries across multiple executor nodes for improved performance on large datasets. This feature is in preview in v1.9.0-rc.2.

Architecture:

A distributed Spice cluster consists of:

- Scheduler: Responsible for distributed query planning and work queue management for the executor fleet

- Executors: One or more nodes responsible for running physical query plans

Getting Started:

Start a scheduler instance using an existing Spicepod. The scheduler is the only spiced instance that needs to be configured:

spiced --cluster-mode scheduler --flight 0.0.0.0:50051

Start one or more executors configured with the scheduler's flight URI:

spiced --cluster-mode executor --scheduler-url spiced://localhost:50051

Query Execution:

Queries run through the scheduler will now show a distributed_plan in EXPLAIN output, demonstrating how the query is distributed across executor nodes:

EXPLAIN SELECT count(id) FROM my_dataset;

Current Limitations:

- Accelerated datasets are currently not supported. This feature is designed for querying partitioned data lake formats (Parquet, Delta Lake, Iceberg, etc.)

- The feature is in preview and may have stability or performance limitations

- Specific acceleration support is planned for future releases

Spice.ai is built on the Apache DataFusion query engine. The v50 release brings significant performance improvements and enhanced reliability:

Performance Improvements 🚀:

- Dynamic Filter Pushdown: Enhanced dynamic filter pushdown for custom ExecutionPlans, ensuring filters propagate correctly through all physical operators for improved query performance.

- Partition Pruning: Expanded partition pruning support ensures that unnecessary partitions are skipped when filters are not used, reducing data scanning overhead and improving query execution times.

Apache Spark Compatible Functions: Added support for Spark-compatible functions including array, bit_get/bit_count, bitmap_count, crc32/sha1, date_add/date_sub, if, last_day, like/ilike, luhn_check, mod/pmod, next_day, parse_url, rint, and width_bucket.

Bug Fixes & Reliability: Resolved issues with partition name validation and empty execution plans when vector index lists are empty. Fixed timestamp support for partition expressions, enabling better partitioning for time-series data.

See the Apache DataFusion 50.0.0 Release for more details.

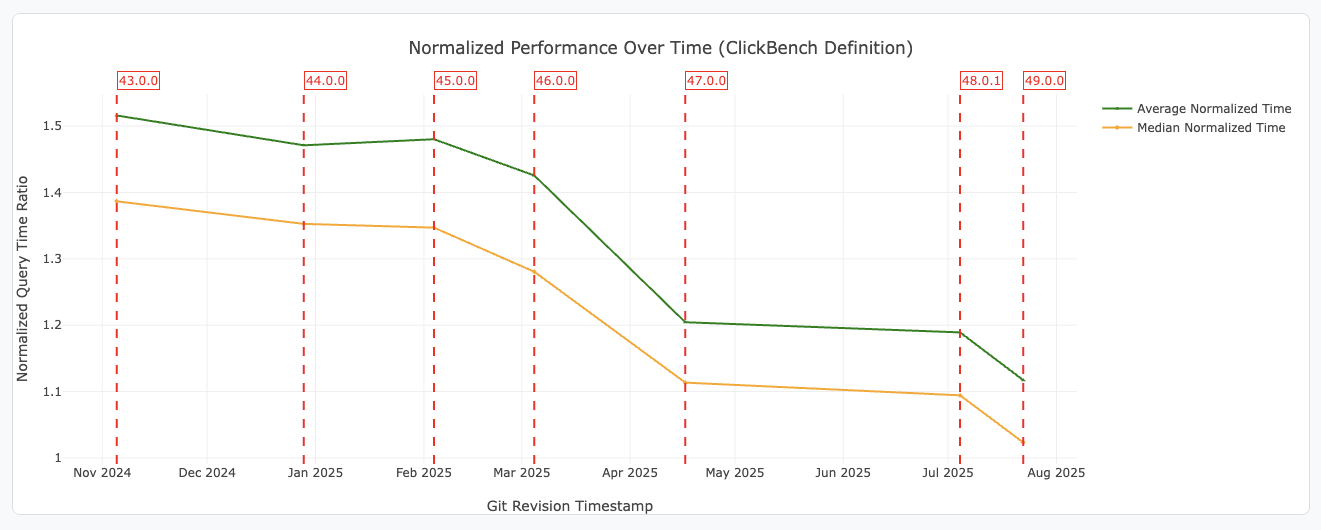

DuckDB v1.4.1: DuckDB has been upgraded to v1.4.1, which includes several performance optimizations.

Composite ART Index Support: DuckDB in Spice now supports composite (multi-column) Adaptive Radix Tree (ART) indexes for accelerated table scans. When queries filter on multiple columns fully covered by a composite index, the optimizer automatically uses index scans instead of full table scans, delivering significant performance improvements for selective queries.

Example configuration:

datasets:
  - from: file://data.parquet
    name: sales
    acceleration:
      enabled: true
      engine: duckdb
      indexes:
        '(region, product_id)': enabled

Performance example with composite index on 7.5M rows:

SELECT * FROM sales WHERE region = 'US' AND product_id = 12345;

DuckDB Intermediate Materialization: Queries with indexes now use intermediate materialization (WITH ... AS MATERIALIZED) to leverage faster index scans. Currently supported for non-federated queries (query_federation: disabled) against a single table with indexes only. When predicates cover more columns than the index, the optimizer rewrites queries to first materialize index-filtered results, then apply remaining predicates. This optimization can deliver significant performance improvements for selective queries.

Example configuration:

datasets:
  - from: file://sales_data.parquet
    name: sales
    acceleration:
      enabled: true
      engine: duckdb
      mode: file
      params:
        query_federation: disabled
      indexes:
        '(region, product_id)': enabled

Performance example:

SELECT * FROM sales
WHERE region = 'US' AND product_id = 12345 AND amount > 1000;

The optimizer automatically rewrites the query to:

WITH _intermediate_materialize AS MATERIALIZED (
  SELECT * FROM sales WHERE region = 'US' AND product_id = 12345
)
SELECT * FROM _intermediate_materialize WHERE amount > 1000;
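The rewrite rule can be sketched at the string level as follows. This is a simplified illustration, not the optimizer's implementation (which presumably operates on query plans rather than SQL text); the function name and the (column, condition) predicate representation are assumptions made for the example.

```python
def rewrite_with_materialization(table, index_columns, predicates):
    """Illustrative rewrite of a single-table query with AND-ed predicates.

    predicates is a list of (column, sql_condition) pairs. The rewrite is
    applied only when every index column has a predicate and at least one
    predicate falls outside the index.
    """
    if not predicates:
        return f"SELECT * FROM {table}"

    indexed = [cond for col, cond in predicates if col in index_columns]
    residual = [cond for col, cond in predicates if col not in index_columns]
    covered = {col for col, _ in predicates if col in index_columns}

    # Fall back to the original query shape when the index is not fully
    # covered or there is nothing left to filter after the index scan.
    if covered != set(index_columns) or not residual:
        where = " AND ".join(cond for _, cond in predicates)
        return f"SELECT * FROM {table} WHERE {where}"

    return (
        "WITH _intermediate_materialize AS MATERIALIZED (\n"
        f"  SELECT * FROM {table} WHERE {' AND '.join(indexed)}\n"
        ")\n"
        f"SELECT * FROM _intermediate_materialize WHERE {' AND '.join(residual)}"
    )


example = rewrite_with_materialization(
    "sales",
    ["region", "product_id"],
    [
        ("region", "region = 'US'"),
        ("product_id", "product_id = 12345"),
        ("amount", "amount > 1000"),
    ],
)
```

With the inputs above, example has the same shape as the rewritten query shown earlier: the index-covered predicates go inside the materialized CTE and amount > 1000 is applied to its result.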

Parquet Buffering for Partitioned Writes: DuckDB partitioned writes in table mode now support Parquet buffering, reducing memory usage and improving write performance for large datasets.

Retention SQL on Refresh Commit: DuckDB accelerations now support running retention SQL on refresh commit, enabling automatic data cleanup and lifecycle management during refresh operations.

UTC Timezone for DuckDB: DuckDB now uses UTC as the default timezone, ensuring consistent behavior for time-based queries across different environments.

Example Spicepod.yml configuration:

datasets:
  - from: s3://my_bucket/large_table/
    name: partitioned_data
    acceleration:
      enabled: true
      engine: duckdb
      mode: file
      retention:
        sql: DELETE FROM partitioned_data WHERE event_time < NOW() - INTERVAL '7 days'

- Querying endpoints as tables: The HTTP/HTTPS Data Connector now supports querying HTTP endpoints directly as tables in SQL queries with dynamic filters. This feature transforms REST APIs into queryable data sources, making it easy to integrate external service data.

- Query an HTTP endpoint that returns structured data (JSON, CSV, etc.) as if it were a database table

- Configurable retry logic, timeouts, and POST request support for more complex API interactions

Example Spicepod.yml configuration:

datasets:
  - from: https://api.tvmaze.com
    name: tvmaze
    params:
      file_format: json
      max_retries: 3
      client_timeout: 10s

Example SQL query:

SELECT request_path, request_query, content
FROM tvmaze
WHERE request_path = '/search/people' and request_query = 'q=michael'
LIMIT 10;

If a request_body is supplied it will be posted to the endpoint:

Example SQL query:

SELECT request_path, request_query, content
FROM tvmaze
WHERE request_path = '/search/people' and request_query = 'q=michael' and request_body = '{"name": "michael"}'
LIMIT 10;
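One way to picture how these filter columns might map onto an HTTP request is the sketch below. It is an assumption-laden illustration using only Python's standard library: the build_request helper is hypothetical, and details such as headers, content types, and retries in the actual connector are not described in these notes.

```python
from urllib.request import Request


def build_request(base_url, request_path, request_query=None, request_body=None):
    """Combine the dataset's base URL with the SQL filter values.

    A request_body, when present, turns the call into a POST; otherwise
    the request is a GET. (Assumed mapping, for illustration only.)
    """
    url = base_url.rstrip("/") + "/" + request_path.lstrip("/")
    if request_query:
        url += "?" + request_query
    data = request_body.encode("utf-8") if request_body is not None else None
    method = "POST" if data is not None else "GET"
    return Request(url, data=data, method=method)


get_req = build_request("https://api.tvmaze.com", "/search/people", "q=michael")
post_req = build_request(
    "https://api.tvmaze.com",
    "/search/people",
    "q=michael",
    '{"name": "michael"}',
)
```

Here get_req targets https://api.tvmaze.com/search/people?q=michael with GET, while post_req sends the JSON body to the same URL with POST. Neither request is actually sent by this snippet.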

HTTP endpoints can be accelerated using refresh_sql:

datasets:
  - from: https://api.tvmaze.com
    name: tvmaze
    acceleration:
      enabled: true
      refresh_mode: full
      refresh_sql: |
        SELECT request_path, request_query, content
        FROM tvmaze
        WHERE request_path = '/search/people'
          AND request_query IN ('q=michael', 'q=luke')

Improved Query Performance: The DynamoDB Data Connector now includes improved filter handling for edge cases, parallel scan support for faster data ingestion, and better error handling for misconfigured queries. These improvements enable more reliable and performant access to DynamoDB data.

Example Spicepod.yml configuration:

datasets:
  - from: dynamodb:my_table
    name: ddb_data
    params:
      scan_segments: 10
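For context on what a segmented scan looks like, DynamoDB's Scan API accepts Segment and TotalSegments parameters so that workers can read disjoint parts of a table in parallel. The sketch below shows how a scan_segments value could fan out into per-segment requests; the helper names and the scan_fn callback are hypothetical stand-ins for a real DynamoDB client, and pagination via LastEvaluatedKey is omitted for brevity.

```python
from concurrent.futures import ThreadPoolExecutor


def segment_requests(table_name, scan_segments):
    """Build one Scan request per segment (Segment is zero-based)."""
    return [
        {"TableName": table_name, "Segment": i, "TotalSegments": scan_segments}
        for i in range(scan_segments)
    ]


def parallel_scan(table_name, scan_segments, scan_fn):
    """Run scan_fn(request) for every segment concurrently.

    scan_fn is a hypothetical callback returning the list of items for
    one segment; results are concatenated in segment order.
    """
    requests = segment_requests(table_name, scan_segments)
    with ThreadPoolExecutor(max_workers=scan_segments) as pool:
        pages = list(pool.map(scan_fn, requests))
    return [item for page in pages for item in page]
```

With scan_segments: 10 as in the configuration above, this sketch would issue ten requests with Segment values 0 through 9.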

Atomic Range Reads for Versioned Files: Spice now supports S3 Versioning for all connectors using object-store (S3, Delta Lake, etc.), ensuring range reads over versioned files are atomically correct. When S3 versioning is enabled, Spice automatically tracks version IDs during file discovery and uses them for all subsequent range reads, preventing inconsistencies from concurrent file modifications.

Current limitations:

- Multi-file connections (e.g., partitioned datasets) do not yet support version tracking across all files

- Version tracking is automatic when S3 versioning is enabled on the bucket
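To illustrate why pinning a version makes range reads consistent: S3's GetObject accepts a versionId query parameter together with a standard Range header, so every byte range can be read from the same immutable object version. The following is a minimal sketch of building such a request; the helper name is hypothetical, request signing is omitted, and it does not reflect how Spice's object-store integration is implemented.

```python
from urllib.parse import quote, urlencode


def versioned_range_request(bucket_url, key, version_id, start, length):
    """Return (url, headers) for reading `length` bytes from `start`.

    When version_id is known (captured at file discovery time), it is
    pinned on the request so later overwrites of the key are not observed.
    """
    url = f"{bucket_url.rstrip('/')}/{quote(key)}"
    if version_id is not None:
        url += "?" + urlencode({"versionId": version_id})
    # HTTP byte ranges are inclusive on both ends.
    headers = {"Range": f"bytes={start}-{start + length - 1}"}
    return url, headers
```

Two range reads issued with the same version_id see the same bytes even if the key is overwritten between them, which is the property the feature relies on.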

Full-Text Search on Views: Full-text search indexes are now supported on views, enabling advanced search scenarios over pre-aggregated or transformed data. This extends the power of Spice's search capabilities beyond base datasets.

Multi-Column Embeddings on Views: Views now support embedding columns, enabling vector search and semantic retrieval on view data. This is useful for search over aggregated or joined datasets.

Vector Engines on Views: Vector search engines are now available for views, enabling similarity search over complex queries and transformations.

Example Spicepod.yml configuration:

views:
  - name: aggregated_reviews
    sql: SELECT review_id, review_text FROM reviews WHERE rating > 4
    embeddings:
      - column: review_text
        model: openai:text-embedding-3-small

Dedicated Query Thread Pool: Query execution and accelerated refreshes now run on their own dedicated thread pool, separate from the HTTP server. This prevents heavy query workloads from slowing down API responses, keeping health checks fast and avoiding unnecessary Kubernetes pod restarts under load.

This feature was opt-in in previous releases and is now enabled by default in v1.9.0-rc.2. To disable it and revert to the previous behavior, add the following spicepod.yaml configuration:

runtime:
  params:
    dedicated_thread_pool: none

Stale-While-Revalidate Cache Control: Query results now support "stale-while-revalidate" cache control, allowing stale cached data to be served immediately while asynchronously refreshing the cache entry in the background. This improves response times for frequently-accessed queries while maintaining data freshness. Requires cache key type to be set to "sql (raw)" for proper operation.

Optimized Prepared Statements: Prepared statement handling has been optimized for better performance with parameterized queries, reducing planning overhead and improving execution time for repeated queries.

Large RecordBatch Chunking: Large Arrow RecordBatch objects are now automatically chunked to control memory usage during query execution, preventing memory exhaustion for queries returning large result sets.
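As a rough illustration of the chunking idea, the sketch below splits a batch of num_rows rows into (offset, length) ranges, the same shape of arguments that Arrow's zero-copy RecordBatch slice operation takes. The function name and the row-count threshold are assumptions; these notes do not say whether Spice chunks by row count or by byte size.

```python
def chunk_ranges(num_rows, max_rows_per_chunk):
    """Return (offset, length) pairs covering num_rows in bounded chunks."""
    if max_rows_per_chunk <= 0:
        raise ValueError("max_rows_per_chunk must be positive")
    return [
        (offset, min(max_rows_per_chunk, num_rows - offset))
        for offset in range(0, num_rows, max_rows_per_chunk)
    ]
```

Each pair could then be passed to a slice call so that downstream operators only ever hold one bounded chunk at a time.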

HTTP Cache-Control Support: The query result cache now supports the stale-while-revalidate Cache-Control directive, enabling faster response times by serving stale cached results immediately while asynchronously refreshing the cache in the background. This feature is particularly useful for applications that can tolerate slightly stale data in exchange for improved performance.

How it works:

When a cache entry is stale but within the stale-while-revalidate window, Spice will:

- Immediately return the stale cached result to the client

- Asynchronously re-execute the query in the background to refresh the cache

- Future requests will use the refreshed data

Configuration:

Use the Cache-Control HTTP header with the stale-while-revalidate directive:

Cache-Control: max-age=300, stale-while-revalidate=60

This configuration caches results for 5 minutes (300 seconds), and allows serving stale results for an additional 60 seconds while refreshing in the background.
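The decision described above can be sketched as a small function over the entry's age and the Cache-Control header. This is an illustrative model only; the function names and the three state labels are assumptions, not identifiers from the Spice runtime.

```python
def parse_cache_control(header):
    """Parse 'name=value' directives into a dict of lowercase names."""
    directives = {}
    for part in header.split(","):
        name, _, value = part.strip().partition("=")
        if name:
            directives[name.lower()] = int(value) if value.isdigit() else None
    return directives


def cache_decision(age_seconds, header):
    """Classify a cache entry as 'fresh', 'stale-revalidate', or 'miss'."""
    directives = parse_cache_control(header)
    max_age = directives.get("max-age") or 0
    swr = directives.get("stale-while-revalidate") or 0
    if age_seconds < max_age:
        return "fresh"  # serve from cache, no refresh needed
    if age_seconds < max_age + swr:
        return "stale-revalidate"  # serve stale, refresh in background
    return "miss"  # too old: re-execute before responding


HEADER = "max-age=300, stale-while-revalidate=60"
```

With HEADER as above, an entry that is 100 seconds old is fresh, one that is 330 seconds old is served stale while a background refresh runs, and one that is 400 seconds old is treated as a miss.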

Requirements:

- Must use plan or raw SQL cache keys (set cache_key_type to sql or plan in results_caching configuration)

- Background revalidation re-executes queries through the normal query path

- Timestamp tracking automatically determines cache entry age for staleness checks

Example configuration via HTTP header:

GET /v1/sql
Cache-Control: max-age=600, stale-while-revalidate=120
X-Cache-Key-Type: sql

This feature improves application responsiveness while ensuring data freshness through background updates.

Enhanced HTTP Client Security: HTTP client usage across the runtime has been hardened with improved TLS validation, certificate pinning for critical endpoints, and better error handling for network failures.

ODBC Connector Improvements: Removed unwrap calls from the ODBC connector, improving error handling and reliability. Fixed secret handling and Kubernetes secret integration.

CLI Permissions Hardening: Tightened file permissions for the CLI and install script, ensuring secure defaults for configuration files and credentials.

Oracle Instant Client Pinning: Oracle Instant Client downloads are now pinned to specific SHAs, ensuring reproducible builds and preventing supply chain attacks.

Improved Credential Retry Logic: AWS SDK credential initialization has been significantly improved with more robust retry logic and better error handling. The system now automatically retries transient credential resolution failures using Fibonacci backoff, allowing Spice to tolerate extended AWS outages (up to ~48 hours) without manual intervention.

Key features:

- Automatic retry with backoff: Implements Fibonacci backoff for transient credential failures (network issues, temporary AWS service disruptions)

- Configurable retry limits: Supports up to 300 retry attempts with a maximum retry interval of 600 seconds

- Better error handling: Distinguishes between retryable errors (connector errors) and non-retryable errors (misconfiguration)

- Unauthenticated access support: Properly supports unauthenticated access to public S3 buckets without requiring credentials

- Improved error messages: Provides detailed logging with attempt numbers, retry intervals, and error context for better troubleshooting

The improvements ensure more reliable AWS service integration, particularly in environments with intermittent network connectivity or during AWS service degradations.
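A quick back-of-the-envelope check shows how the stated limits relate to the ~48 hour figure. The sketch below assumes the Fibonacci sequence starts at a one-second base interval, which is an assumption not stated in these notes; the 300-attempt limit and 600-second cap come from the list above.

```python
from itertools import islice


def fibonacci_backoff(max_interval_secs=600, base_secs=1):
    """Yield Fibonacci-spaced retry delays, capped at max_interval_secs."""
    a, b = base_secs, base_secs
    while True:
        yield min(a, max_interval_secs)
        a, b = b, a + b


# 300 attempts with a 600-second cap, as described above.
delays = list(islice(fibonacci_backoff(), 300))
total_hours = sum(delays) / 3600
```

Under that assumption the delays run 1, 1, 2, 3, 5, 8, ... up to 377 seconds, reach the 600-second cap on the fifteenth attempt, and sum to 172,586 seconds, or roughly 47.9 hours.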

DataFusion Log Emission: The Spice runtime now emits DataFusion internal logs, providing deeper visibility into query planning and execution for debugging and performance analysis.

AI Completions Tracing: Fixed tracing so that ai_completions operations are correctly parented under sql_query traces, improving observability for AI-powered queries.

Version-Controlled Data Access: The new Git Data Connector (Alpha) enables querying datasets stored in Git repositories. This connector is ideal for use cases involving configuration files, documentation, or any data tracked in version control.

Example Spicepod.yml configuration:

datasets:
  - from: git:https://github.com/myorg/myrepo
    name: git_metrics
    params:
      file_format: csv

For more details, refer to the Git Data Connector Documentation.

The Spice Java SDK has been upgraded with support for a configurable Arrow memory limit: spice-java v0.4.0

SpiceClient client = SpiceClient.builder()
    .withArrowMemoryLimitMB(1024) // 1GB limit
    .build();

Install Specific Versions: The spice install command now supports installing specific versions of the Spice runtime and CLI. This enables easy version management, downgrading, or installation of specific releases for testing or compatibility requirements.

Usage:

# Install a specific version
spice install v1.8.3

# Install a specific version with AI flavor
spice install v1.8.3 ai

# Install latest version (existing behavior)
spice install
spice install ai

Note: Homebrew installations require manual version management via brew install spiceai/spiceai/spice@<version>.

Persistent Query History: The Spice CLI REPL (SQL, search, and chat interfaces) now persists command history to ~/.spice/query_history.txt, making your query history available across sessions. The history file is automatically created if it doesn't exist, with graceful fallback if the home directory cannot be determined.

New REPL Commands:

- .clear - Clear the screen using ANSI escape codes for a clean workspace

- .clear history - Clear and persist the query history, removing all stored commands

Tab Completion: Tab completion now includes suggestions based on your command history, making it faster to re-run or modify previous queries.

Example usage:

sql> SELECT * FROM my_table;
sql> .clear # Clears the screen
sql> .clear history # Clears command history
sql> # Use arrow keys or tab to access previous commands

- Reliability: Fixed refresh worker panics with recovery handling to prevent runtime crashes during acceleration refreshes.

- Reliability: Improved error messages for missing or invalid spicepod.yaml files, providing actionable feedback for misconfiguration.

- Reliability: Fixed DuckDB metadata pointer loading issues for snapshots.

- Performance: Ensured ListingTable partitions are pruned correctly when filters are not used.

- Reliability: Fixed vector dimension determination for partitioned indexes.

- Search: Fixed casing issues in Reciprocal Rank Fusion (RRF) for hybrid search queries.

- Search: Fixed search field handling as metadata for chunked search indexes.

- Validation: Added timestamp support for partition expressions.

- Validation: Fixed regexp_match function for DuckDB datasets.

- Validation: Fixed partition name validation for improved reliability.

No breaking changes.

New HTTP Data Connector Recipe: New recipe demonstrating how to query REST APIs and HTTP(s) endpoints. See HTTP Connector Recipe for details.

The Spice Cookbook includes 82 recipes to help you get started with Spice quickly and easily.

To upgrade to v1.9.0-rc.2, use one of the following methods:

CLI:

Homebrew:

brew upgrade spiceai/spiceai/spice

Docker:

Pull the spiceai/spiceai:1.9.0-rc.2 image:

docker pull spiceai/spiceai:1.9.0-rc.2

For available tags, see DockerHub.

Helm:

helm repo update

helm upgrade spiceai spiceai/spiceai

AWS Marketplace:

🎉 Spice is now available in the AWS Marketplace!

Source:
