Spice v1.0.2 (Feb 4, 2025)

Announcing the release of Spice v1.0.2 🎓

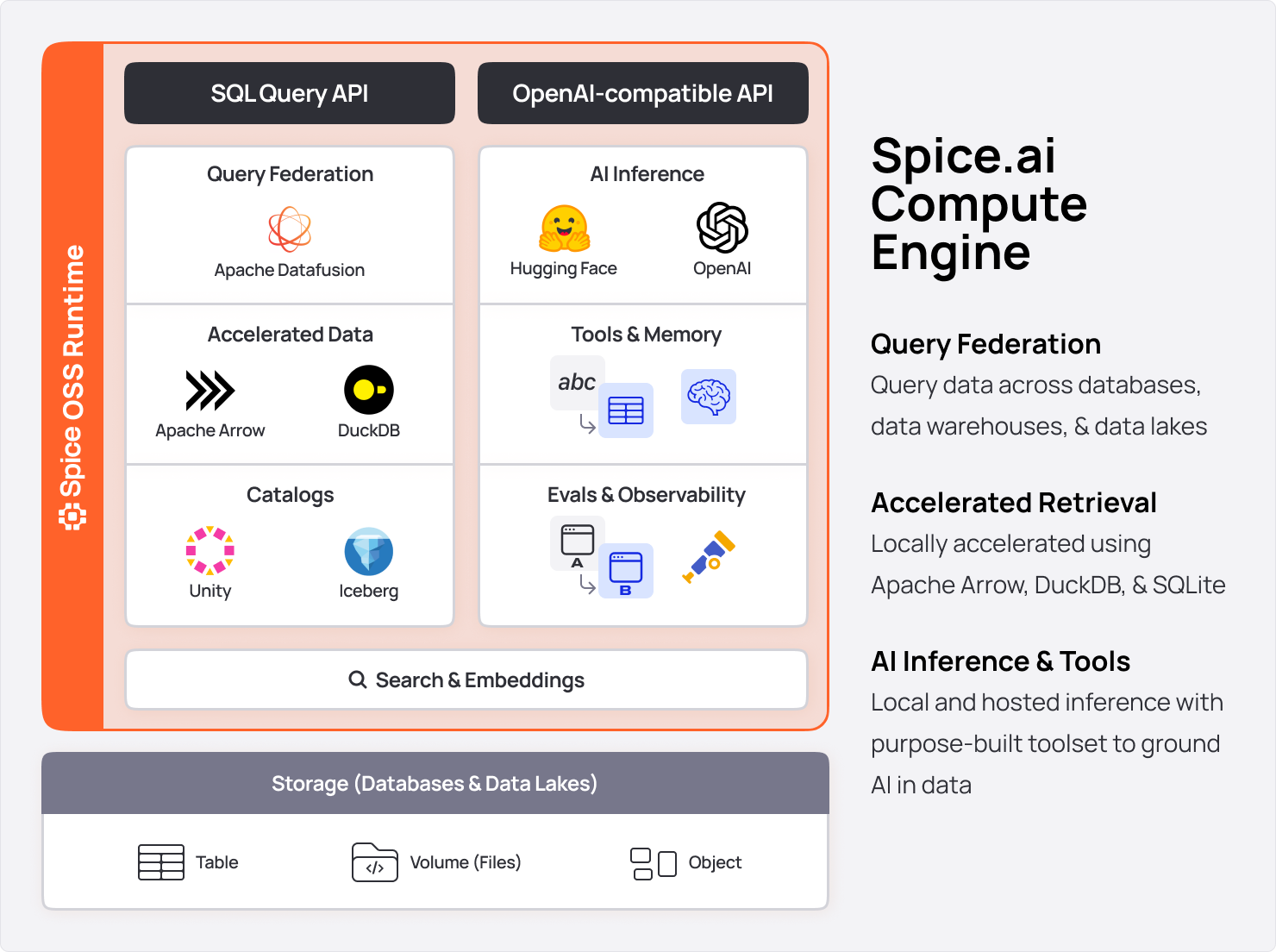

Spice v1.0.2 adds support for running DeepSeek models, including R1, hosted on the local filesystem (cloud-hosted models via the DeepSeek API were already supported). It also improves the developer experience for debugging AI chat tasks and includes several bug fixes. The HuggingFace and Filesystem-Hosted model providers have both graduated to Release Candidate (RC), and the Spice.ai Cloud Platform catalog provider has graduated to Beta.

Highlights in v1.0.2

- spice trace (New): A spice trace CLI command that outputs a detailed breakdown of traces and tasks, including tool usage and AI completions. Examples:

trace> spice trace ai_chat

[61cc6bd0e571c783] ( 2593.77ms) ai_chat

├── [69362c30f238076f] ( 0.36ms) tool_use::get_readiness

├── [b6b17f1a9a6b86dc] ( 982.21ms) ai_completion

├── [c30d692c6c41c5ee] ( 0.06ms) tool_use::list_datasets

└── [ce18756d5fef0df0] ( 1605.12ms) ai_completion

trace> spice trace ai_chat --trace-id 61cc6bd0e571c783

trace> spice trace ai_chat --id chatcmpl-AvXwmPSV1PMyGBi9dLfkEQTZPjhqzThe

The spice trace CLI simply outputs data available in the runtime.task_history table, which can also be queried with SQL.

- Filesystem-Hosted Models Provider: Graduated to Release Candidate (RC). To learn more, see the Filesystem-Hosted Models Provider Documentation.

- HuggingFace Models Provider: Graduated to Release Candidate (RC). To learn more, see the HuggingFace Models Provider Documentation.

- Spice.ai Cloud Platform Catalog: Graduated to Beta.
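Because spice trace reads from the runtime.task_history table, a similar tree can in principle be rebuilt from rows fetched with SQL. The Python sketch below is illustrative only and is not how the CLI is implemented. The column names in TRACE_QUERY (trace_id, span_id, parent_span_id, task, execution_duration_ms, start_time) are assumptions to check against your runtime's schema, the query is never executed here, and the sample rows are copied from the example output above, with the four child spans assumed to point at the ai_chat span as their parent.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple

# Assumed column names; verify them against runtime.task_history in your runtime.
# The query is shown for reference only and is not executed by this sketch.
TRACE_QUERY = """
SELECT span_id, parent_span_id, task, execution_duration_ms
FROM runtime.task_history
WHERE trace_id = '61cc6bd0e571c783'
ORDER BY start_time
"""

# (span_id, parent_span_id, task, duration in ms)
Row = Tuple[str, Optional[str], str, float]

# Sample rows taken from the spice trace example output above.
ROWS: List[Row] = [
    ("61cc6bd0e571c783", None, "ai_chat", 2593.77),
    ("69362c30f238076f", "61cc6bd0e571c783", "tool_use::get_readiness", 0.36),
    ("b6b17f1a9a6b86dc", "61cc6bd0e571c783", "ai_completion", 982.21),
    ("c30d692c6c41c5ee", "61cc6bd0e571c783", "tool_use::list_datasets", 0.06),
    ("ce18756d5fef0df0", "61cc6bd0e571c783", "ai_completion", 1605.12),
]


@dataclass
class Span:
    span_id: str
    task: str
    duration_ms: float
    children: List["Span"] = field(default_factory=list)


def build_tree(rows: List[Row]) -> Span:
    """Link rows through parent_span_id and return the root span."""
    spans: Dict[str, Span] = {r[0]: Span(r[0], r[2], r[3]) for r in rows}
    root: Optional[Span] = None
    for span_id, parent_id, _task, _duration in rows:
        if parent_id is None:
            root = spans[span_id]
        else:
            spans[parent_id].children.append(spans[span_id])
    if root is None:
        raise ValueError("no root span (a row without a parent) was found")
    return root


def render(span: Span, prefix: str = "", connector: str = "") -> List[str]:
    """Return the span and its descendants as tree-formatted lines."""
    label = f"[{span.span_id}] ({span.duration_ms:>10.2f}ms) {span.task}"
    lines = [prefix + connector + label]
    # Children of the root get no indent; deeper levels extend the prefix.
    if connector == "":
        child_prefix = prefix
    elif connector == "└── ":
        child_prefix = prefix + "    "
    else:
        child_prefix = prefix + "│   "
    for index, child in enumerate(span.children):
        is_last = index == len(span.children) - 1
        lines.extend(render(child, child_prefix, "└── " if is_last else "├── "))
    return lines


if __name__ == "__main__":
    print("\n".join(render(build_tree(ROWS))))
```

To use this with real data, replace ROWS with the result of running a query like TRACE_QUERY through whichever SQL client you already use with Spice.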

Contributors

- @phillipleblanc

- @johnnynunez

- @Sevenannn

- @sgrebnov

- @peasee

- @Jeadie

- @lukekim

New Contributors

- @johnnynunez made their first contribution in github.com/spiceai/spiceai/pull/4502

Breaking Changes

No breaking changes.

Cookbook Updates

Upgrading

To upgrade to v1.0.2, use one of the following methods:

CLI:

spice upgrade

Homebrew:

brew upgrade spiceai/spiceai/spice

Docker:

Pull the spiceai/spiceai:1.0.2 image:

docker pull spiceai/spiceai:1.0.2

For available tags, see DockerHub.

Helm:

helm repo update

helm upgrade spiceai spiceai/spiceai

What's Changed

Dependencies

No major dependency changes.

Changelog

- Update release branch naming by @phillipleblanc in https://github.com/spiceai/spiceai/pull/4539

- ready for arm buildings by @johnnynunez in https://github.com/spiceai/spiceai/pull/4502

- Bump helm chart version to 1.0.1 by @Sevenannn in https://github.com/spiceai/spiceai/pull/4542

- Include 1.0.1 as supported version in security.md by @Sevenannn in https://github.com/spiceai/spiceai/pull/4545

- Update CI to build on hosted windows runners by @phillipleblanc in https://github.com/spiceai/spiceai/pull/4540

- docs: Update Windows install by @peasee in https://github.com/spiceai/spiceai/pull/4551

- Fix spark spicepod for test operator by @Sevenannn in https://github.com/spiceai/spiceai/pull/4555

- Improve hugging face model chat error by @Sevenannn in https://github.com/spiceai/spiceai/pull/4554

- fix: Update Windows E2E install by @peasee in https://github.com/spiceai/spiceai/pull/4557

- feat: Add Spice Cloud Catalog Spicepod, release Alpha by @peasee in https://github.com/spiceai/spiceai/pull/4561

- Fix huggingface embedding errors by @Sevenannn in https://github.com/spiceai/spiceai/pull/4558

- feat: Load table schemas through REST for Spice Cloud Catalog by @peasee in https://github.com/spiceai/spiceai/pull/4563

- Add upgrade instruction in release note by @Sevenannn in https://github.com/spiceai/spiceai/pull/4548

- Add federated source information to refresh errors by @sgrebnov in https://github.com/spiceai/spiceai/pull/4560

- docs: Update ROADMAP.md by @peasee in https://github.com/spiceai/spiceai/pull/4566

- Merge mistral upstream by @Jeadie in https://github.com/spiceai/spiceai/pull/4562

- Fix windows build by @Sevenannn in https://github.com/spiceai/spiceai/pull/4574

- feat: Update Spice Cloud Catalog errors, release as Beta by @peasee in https://github.com/spiceai/spiceai/pull/4575

- docs: Add TOC to README.md by @peasee in https://github.com/spiceai/spiceai/pull/4538

- Updates to spiceai/mistral.rs by @Jeadie in https://github.com/spiceai/spiceai/pull/4580

- Improve refresh error tracing by @sgrebnov in https://github.com/spiceai/spiceai/pull/4576

- Add HTTP consistency & overhead to testoperator dispatch tool by @Jeadie in https://github.com/spiceai/spiceai/pull/4556

- Fix append mode refresh with MySQL Data Connector by @sgrebnov in https://github.com/spiceai/spiceai/pull/4583

- fix: Retry flaky tests by @peasee in https://github.com/spiceai/spiceai/pull/4577

- Fix E2E models test build on macOS runners by @sgrebnov in https://github.com/spiceai/spiceai/pull/4585

- spice trace chat support in CLI by @Jeadie in https://github.com/spiceai/spiceai/pull/4582

- Include hf test specs, enable ready_wait in workflow by @Sevenannn in https://github.com/spiceai/spiceai/pull/4584

- Add paths verification when loading models by @sgrebnov in https://github.com/spiceai/spiceai/pull/4591

- Add generation_config.json support for Filesystem models by @sgrebnov in https://github.com/spiceai/spiceai/pull/4592

- Promote Filesystem model provider to RC by @sgrebnov in https://github.com/spiceai/spiceai/pull/4593

- docs: Add models grading criteria by @peasee in https://github.com/spiceai/spiceai/pull/4550

- Fix typo in Alpha Release Criteria (models) by @sgrebnov in https://github.com/spiceai/spiceai/pull/4588

- fix: Retry AI integration tests by @peasee in https://github.com/spiceai/spiceai/pull/4595

- Run LLM integration tests on Macs; add running local models by @Jeadie in https://github.com/spiceai/spiceai/pull/4495

- Update version to 1.0.2 by @sgrebnov in https://github.com/spiceai/spiceai/pull/4594

- feat: Schedule testoperator by @peasee in https://github.com/spiceai/spiceai/pull/4503

- Improve UX of downloading GGUF from HF by @Jeadie in https://github.com/spiceai/spiceai/pull/4601

- Improve spice trace CLI command by @sgrebnov in https://github.com/spiceai/spiceai/pull/4629

- Improve the UX of using huggingface models & embeddings by @phillipleblanc in https://github.com/spiceai/spiceai/pull/4623

- GGUF, hide metadata by @Jeadie in https://github.com/spiceai/spiceai/pull/4631

- Promote hugging face to rc by @Sevenannn in https://github.com/spiceai/spiceai/pull/4626

- Endgame Issue template improvements by @lukekim in https://github.com/spiceai/spiceai/pull/4647

- feat: setup sccache for PR checks by @peasee in https://github.com/spiceai/spiceai/pull/4652

- Run build_and_release_cuda.yml when crates/llms/Cargo.toml changes by @Jeadie in https://github.com/spiceai/spiceai/pull/4648

- Update E2E installation tests to match model runtime version by @sgrebnov in https://github.com/spiceai/spiceai/pull/4653

- fix: Postgres LargeUtf8 is equal to Utf8 by @peasee in https://github.com/spiceai/spiceai/pull/4664

- Fix eager string formatting in mistral.rs by @Jeadie in https://github.com/spiceai/spiceai/pull/4665

- Better error for spicepod parsing by @Sevenannn in https://github.com/spiceai/spiceai/pull/4632

- Update datafusion-table-providers (MySQL improvements) by @sgrebnov in https://github.com/spiceai/spiceai/pull/4670

- Handle delta tables partitioned by a date column with large date values by @phillipleblanc in https://github.com/spiceai/spiceai/pull/4672

**Full Changelog**: https://github.com/spiceai/spiceai/compare/v1.0.1...v1.0.2

Resources

Community

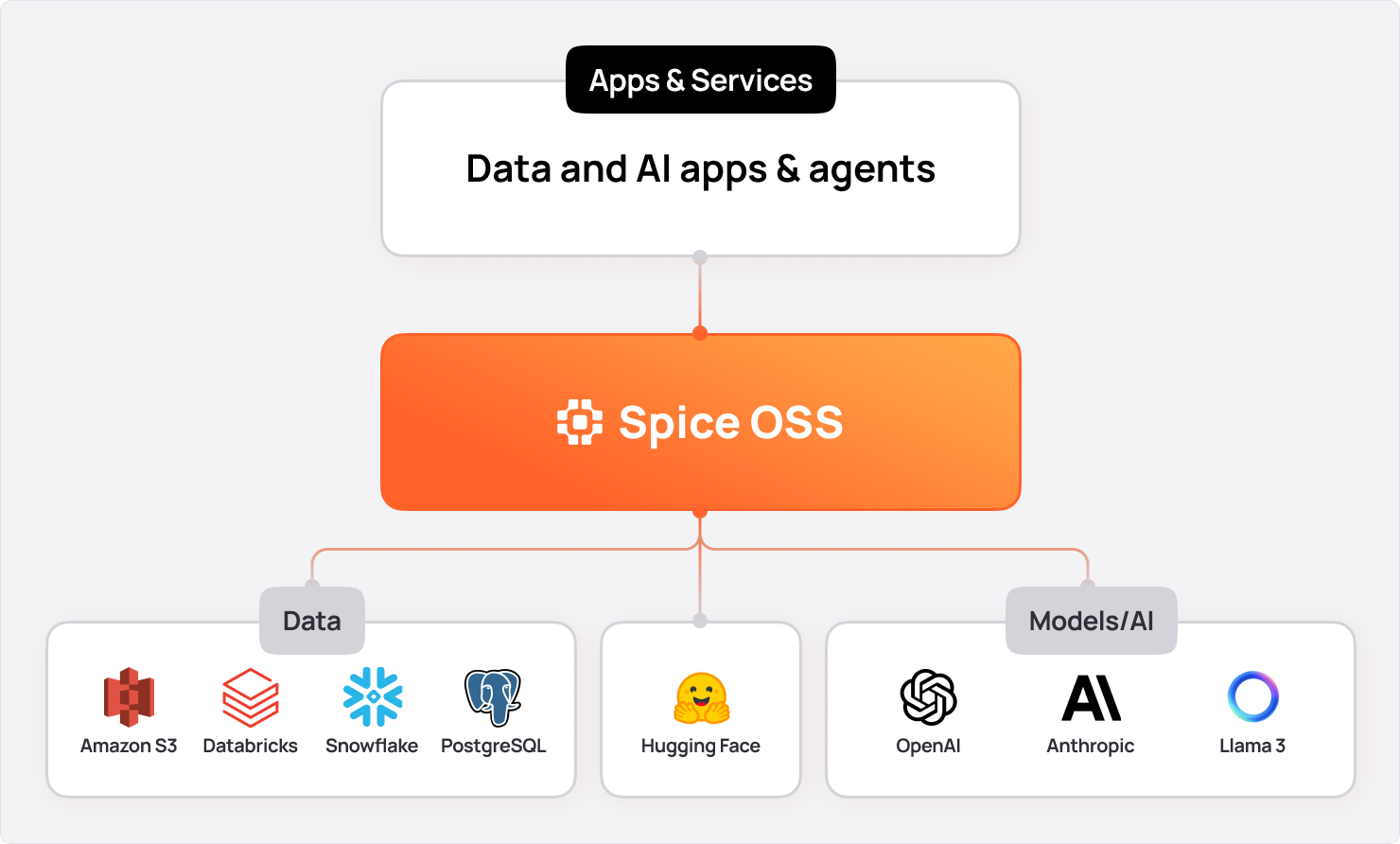

Spice.ai started with the vision to make AI easy for developers. We are building Spice.ai in the open and with the community. Reach out on Slack or by email to get involved.

- Twitter: @spice_ai

- Slack: spiceai.org/slack

- Telegram: Spice AI Discussion

- Reddit: https://www.reddit.com/r/spiceai

- Email: [email protected]