Announcing the release of Spice v1.4.0! ⚡

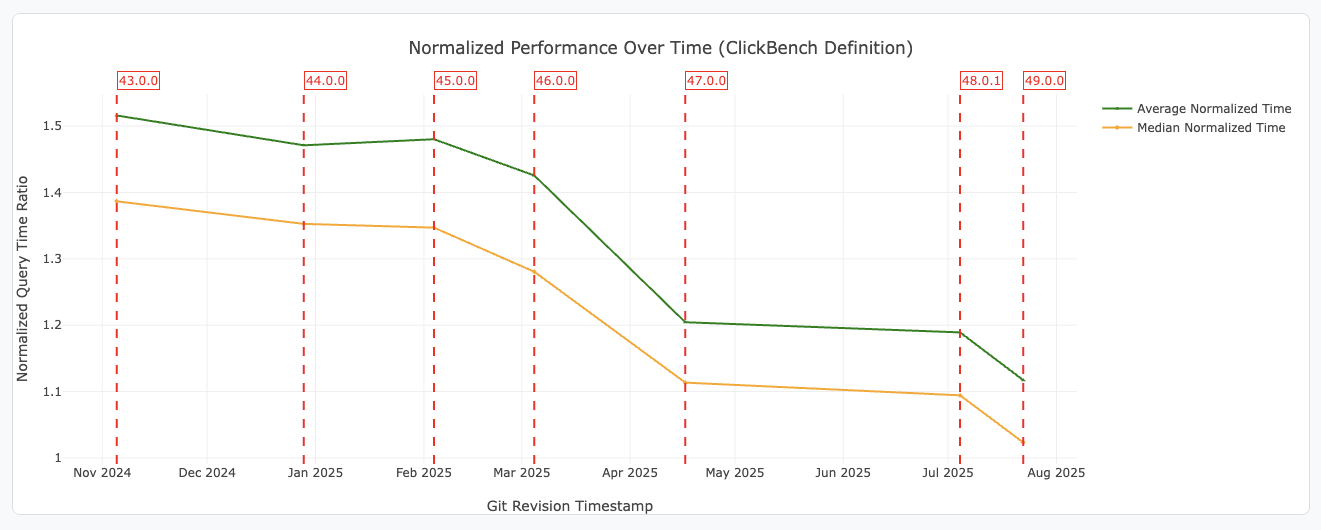

This release upgrades DataFusion to v47 and Arrow to v55 for faster queries, more efficient Parquet/CSV handling, and improved reliability. It introduces the AWS Glue Catalog and Data Connectors for native access to Glue-managed data on S3, and adds support for Databricks U2M OAuth for secure Databricks user authentication.

New cron-based dataset refreshes and worker schedules enable automated task management, while improvements to dataset and search results caching further optimize query, search, and RAG performance.

Spice.ai is built on the DataFusion query engine. The v47 release brings:

Performance Improvements 🚀: This release delivers major query speedups through specialized GroupsAccumulator implementations for first_value, last_value, and min/max on Duration types, which eliminate unnecessary sorting and computation. TopK operations are now up to 10x faster thanks to early-exit optimizations, and sort performance improves further through reused row converters, fewer redundant clones, and optimized sort-preserving merge streams. Logical AND/OR expressions now use short-circuit evaluation to reduce overhead. Additional enhancements address high latency from sequential metadata fetching, make int/string comparisons more efficient, and simplify logical expressions for better execution.
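Short-circuit evaluation means the right-hand side of `AND`/`OR` is skipped when the left-hand side already decides the result for the whole batch. The Python sketch below models that idea on a batch of SQL booleans, with `None` standing in for NULL. It is a simplified illustration, not DataFusion's Rust implementation, and the function names are hypothetical.

```python
from typing import Callable, List, Optional

# A SQL boolean: True, False, or None (NULL)
Bool = Optional[bool]


def sql_and(a: Bool, b: Bool) -> Bool:
    # Three-valued AND: FALSE wins, then NULL, otherwise TRUE
    if a is False or b is False:
        return False
    if a is None or b is None:
        return None
    return True


def sql_or(a: Bool, b: Bool) -> Bool:
    # Three-valued OR: TRUE wins, then NULL, otherwise FALSE
    if a is True or b is True:
        return True
    if a is None or b is None:
        return None
    return False


def eval_and(left: List[Bool], right: Callable[[], List[Bool]]) -> List[Bool]:
    # If every left value is FALSE, the whole batch is FALSE:
    # return early without evaluating the right-hand expression.
    if all(v is False for v in left):
        return list(left)
    return [sql_and(a, b) for a, b in zip(left, right())]


def eval_or(left: List[Bool], right: Callable[[], List[Bool]]) -> List[Bool]:
    # If every left value is TRUE, the whole batch is TRUE.
    if all(v is True for v in left):
        return list(left)
    return [sql_or(a, b) for a, b in zip(left, right())]
```

Passing the right-hand side as a callable is what makes skipping it possible; in a real engine that callable would be an expensive expression evaluation over the batch.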

Bug Fixes & Compatibility Improvements 🛠️: The release fixes issues with external sort, aggregation, and window functions, improves handling of NULL values and type casting in arrays and binary operations, and corrects problems with complex joins and nested window expressions. It also fixes SQL unparsing for subqueries, aliases, and UNION BY NAME.

See the Apache DataFusion 47.0.0 Changelog for details.

Arrow v55 delivers faster Parquet gzip compression, improved array concatenation, and better support for large files (4GB+) and modular encryption. Parquet metadata reads are now more efficient, with support for range requests and enhanced compatibility for INT96 timestamps and timezones. CSV parsing is more robust, with clearer error messages. These updates boost performance, compatibility, and reliability.
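Range-request metadata reads rely on the Parquet file layout: a file ends with the footer metadata, a 4-byte little-endian footer length, and the magic bytes `PAR1`. A reader can therefore fetch only the last 8 bytes, decode the length, and issue one more range request for the metadata instead of downloading the whole file. The Python sketch below models this with a hypothetical `read_range(start, end)` callback. It is not arrow-rs code.

```python
import struct
from typing import Callable

MAGIC = b"PAR1"


def read_footer(size: int, read_range: Callable[[int, int], bytes]) -> bytes:
    """Fetch Parquet footer metadata with two range reads.

    read_range(start, end) is assumed to return bytes [start, end) of the file.
    """
    if size < 12:  # leading magic + 4-byte length + trailing magic
        raise ValueError("file too small to be Parquet")
    tail = read_range(size - 8, size)  # 4-byte LE footer length + magic
    if tail[4:] != MAGIC:
        raise ValueError("missing trailing PAR1 magic")
    (meta_len,) = struct.unpack("<I", tail[:4])
    start = size - 8 - meta_len
    if start < 4:
        raise ValueError("footer length exceeds file size")
    # Second request: exactly the metadata bytes, nothing else
    return read_range(start, size - 8)
```

Production readers often prefetch a larger tail in the first request so that small footers arrive in a single round trip.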

See the Arrow 55.0.0 Changelog and Arrow 55.1.0 Changelog for details.

Search Result Caching: Spice now supports runtime caching for search results, improving performance for subsequent searches and chat completion requests that use the document_similarity LLM tool. Caching is configurable with options like maximum size, item TTL, eviction policy, and hashing algorithm.

Example spicepod.yml configuration:

    runtime:
      caching:
        search_results:
          enabled: true
          max_size: 128mb
          item_ttl: 5s
          eviction_policy: lru
          hashing_algorithm: siphash

For more information, refer to the Caching documentation.
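To make these options concrete, here is a minimal Python sketch of a cache with a byte budget (like max_size), a per-entry TTL (like item_ttl), and least-recently-used eviction (like eviction_policy: lru). It uses SHA-256 from hashlib as a stand-in for the configured hashing_algorithm, since SipHash is not exposed in the Python standard library. The class and method names are hypothetical; this is not Spice's implementation.

```python
import hashlib
import time
from collections import OrderedDict
from typing import Callable, Optional, Tuple


class SearchResultCache:
    """Hypothetical LRU cache with a byte budget and per-item TTL."""

    def __init__(self, max_size_bytes: int, item_ttl_s: float,
                 clock: Callable[[], float] = time.monotonic):
        self.max_size = max_size_bytes
        self.ttl = item_ttl_s
        self.clock = clock
        self.used = 0
        # key -> (expires_at, payload); iteration order is least -> most recently used
        self.entries: "OrderedDict[str, Tuple[float, bytes]]" = OrderedDict()

    @staticmethod
    def key(query: str) -> str:
        # Stand-in for the configured hashing algorithm
        return hashlib.sha256(query.encode()).hexdigest()

    def get(self, query: str) -> Optional[bytes]:
        k = self.key(query)
        entry = self.entries.get(k)
        if entry is None:
            return None
        expires_at, payload = entry
        if self.clock() >= expires_at:
            self._remove(k)  # expired: drop it and report a miss
            return None
        self.entries.move_to_end(k)  # mark as most recently used
        return payload

    def put(self, query: str, payload: bytes) -> None:
        if len(payload) > self.max_size:
            return  # larger than the whole budget: skip caching
        k = self.key(query)
        if k in self.entries:
            self._remove(k)
        self.entries[k] = (self.clock() + self.ttl, payload)
        self.used += len(payload)
        # Evict least recently used entries until back within budget
        while self.used > self.max_size:
            self._remove(next(iter(self.entries)))

    def _remove(self, k: str) -> None:
        _, payload = self.entries.pop(k)
        self.used -= len(payload)
```

Injecting the clock keeps TTL behavior testable without real sleeps.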

AWS Glue Catalog Connector Alpha: Connect to AWS Glue Data Catalogs to query Iceberg, Parquet, or CSV tables in S3.

Example spicepod.yml configuration:

    catalogs:
      - from: glue
        name: my_glue_catalog
        params:
          glue_key: <your-access-key-id>
          glue_secret: <your-secret-access-key>
          glue_region: <your-region>
        include:
          - 'testdb.hive_*'
          - 'testdb.iceberg_*'

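    sql> show tables;
    +-----------------+--------------+-------------------+------------+
    | table_catalog   | table_schema | table_name        | table_type |
    +-----------------+--------------+-------------------+------------+
    | my_glue_catalog | testdb       | hive_table_001    | BASE TABLE |
    | my_glue_catalog | testdb       | iceberg_table_001 | BASE TABLE |
    | spice           | runtime      | task_history      | BASE TABLE |
    +-----------------+--------------+-------------------+------------+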
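Assuming the `include` entries behave like shell-style glob patterns over `schema.table` names (an assumption based on the `*` wildcards in the example), the filtering can be sketched in Python with `fnmatch`. The table names are hypothetical, and this is not the connector's actual matching code.

```python
from fnmatch import fnmatchcase
from typing import Iterable, List


def included_tables(tables: Iterable[str], patterns: List[str]) -> List[str]:
    """Keep 'schema.table' names that match any include pattern (glob-style assumed)."""
    return [t for t in tables if any(fnmatchcase(t, p) for p in patterns)]


patterns = ["testdb.hive_*", "testdb.iceberg_*"]
# Hypothetical table names as they might appear in a Glue Data Catalog
tables = [
    "testdb.hive_table_001",
    "testdb.iceberg_table_001",
    "testdb.staging_raw",
    "otherdb.hive_table_002",
]
print(included_tables(tables, patterns))
# ['testdb.hive_table_001', 'testdb.iceberg_table_001']
```

`fnmatchcase` is used so matching stays case-sensitive regardless of the host operating system.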

For more information, refer to the Glue Catalog Connector documentation.

AWS Glue Data Connector Alpha: Connect to specific tables in AWS Glue Data Catalogs to query Iceberg, Parquet, or CSV tables in S3.

Example spicepod.yml configuration:

    datasets:
      - from: glue:my_database.my_table
        name: my_table
        params:
          glue_auth: key
          glue_region: us-east-1
          glue_key: ${secrets:AWS_ACCESS_KEY_ID}
          glue_secret: ${secrets:AWS_SECRET_ACCESS_KEY}

For more information, refer to the Glue Data Connector documentation.
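The `${secrets:...}` placeholders keep credentials out of spicepod.yml; the Spice runtime resolves them from its configured secret stores. The hypothetical Python resolver below mimics that substitution against an in-memory mapping. The regex, the allowed name characters, the tolerance for inner whitespace, and the error behavior are all assumptions, not Spice's implementation.

```python
import re
from typing import Mapping

# Matches ${secrets:NAME}, optionally with spaces inside the braces (assumed syntax)
SECRET_REF = re.compile(r"\$\{\s*secrets:([A-Za-z_][A-Za-z0-9_]*)\s*\}")


def resolve_secrets(value: str, store: Mapping[str, str]) -> str:
    """Replace every ${secrets:NAME} in value with store[NAME]."""

    def lookup(m: "re.Match[str]") -> str:
        name = m.group(1)
        if name not in store:
            # Fail loudly rather than passing an empty credential downstream
            raise KeyError(f"secret {name!r} not found")
        return store[name]

    return SECRET_REF.sub(lookup, value)


# Placeholder values only; never hard-code real credentials
store = {"AWS_ACCESS_KEY_ID": "example-key", "AWS_SECRET_ACCESS_KEY": "example-secret"}
print(resolve_secrets("${secrets:AWS_ACCESS_KEY_ID}", store))  # example-key
```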

Databricks U2M OAuth: Spice now supports User-to-Machine (U2M) authentication for Databricks when called with a compatible client, such as the Spice Cloud Platform.

Example spicepod.yml configuration:

    datasets:
      - from: databricks:spiceai_sandbox.default.messages
        name: messages
        params:
          databricks_endpoint: ${secrets:DATABRICKS_ENDPOINT}
          databricks_cluster_id: ${secrets:DATABRICKS_CLUSTER_ID}
          databricks_client_id: ${secrets:DATABRICKS_CLIENT_ID}

Dataset Refresh Schedules: Accelerated datasets now support a refresh_cron parameter that automatically refreshes the dataset on a defined cron schedule. Cron-scheduled refreshes respect the global dataset_refresh_parallelism parameter.

Example spicepod.yml configuration:

    datasets:
      - name: my_dataset
        from: s3://my-bucket/my_file.parquet
        acceleration:
          refresh_cron: 0 0 * * *

For more information, refer to the Dataset Refresh Schedules documentation.
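A global limit such as dataset_refresh_parallelism caps how many refreshes run at once, even when several cron schedules fire at the same moment. The asyncio sketch below models that cap with a semaphore. The dataset names and the sleep-based "refresh" are stand-ins, and it is not meant to describe how Spice's Rust runtime is built internally.

```python
import asyncio
from typing import List


async def run_due_refreshes(datasets: List[str], parallelism: int) -> int:
    """Refresh all due datasets, at most `parallelism` at a time.

    Returns the peak number of concurrent refreshes observed.
    """
    limit = asyncio.Semaphore(parallelism)
    active = 0
    peak = 0

    async def refresh(name: str) -> None:
        nonlocal active, peak
        async with limit:  # wait here until a refresh slot is free
            active += 1
            peak = max(peak, active)
            await asyncio.sleep(0.01)  # stand-in for the real refresh work on `name`
            active -= 1

    # All schedules fired together; the semaphore serializes the excess
    await asyncio.gather(*(refresh(d) for d in datasets))
    return peak


print(asyncio.run(run_due_refreshes(["a", "b", "c", "d", "e"], parallelism=2)))  # 2
```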

Worker Execution Schedules: Workers now support a cron parameter and automatically execute an LLM prompt or SQL query on the defined cron schedule. For LLM workers, the prompt to run is provided in params.prompt.

Example spicepod.yml configuration:

    workers:
      - name: email_reporter
        models:
          - from: gpt-4o
            params:
              prompt: 'Inspect the latest emails, and generate a summary report for them. Post the summary report to the connected Teams channel'
        cron: 0 2 * * *

For more information, refer to the Worker Execution Schedules documentation.
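The cron expressions in these examples use the standard five fields (minute, hour, day of month, month, day of week), so `0 0 * * *` fires daily at midnight, `0 2 * * *` daily at 02:00, and `0 * * * *` at the top of every hour. The Python sketch below computes the next fire time for a small subset of that syntax (only `*` and single integers). It is not Spice's scheduler and ignores time zones by working on naive datetimes.

```python
from datetime import datetime, timedelta


def _field_matches(field: str, value: int) -> bool:
    # Supported subset: "*" (any value) or a single integer such as "0" or "2"
    return field == "*" or int(field) == value


def cron_matches(expr: str, t: datetime) -> bool:
    """Return True if datetime t satisfies a five-field cron expression."""
    minute, hour, dom, month, dow = expr.split()
    # cron numbers weekdays with 0 = Sunday; Python's weekday() uses 0 = Monday
    cron_dow = (t.weekday() + 1) % 7
    # Simplification: all fields are ANDed. Standard cron ORs day-of-month
    # and day-of-week when both are restricted.
    return (
        _field_matches(minute, t.minute)
        and _field_matches(hour, t.hour)
        and _field_matches(dom, t.day)
        and _field_matches(month, t.month)
        and _field_matches(dow, cron_dow)
    )


def next_fire(expr: str, after: datetime) -> datetime:
    """Return the first whole minute strictly after `after` that matches expr."""
    t = after.replace(second=0, microsecond=0) + timedelta(minutes=1)
    # Scan minute by minute: slow but simple, bounded to about one year
    for _ in range(366 * 24 * 60):
        if cron_matches(expr, t):
            return t
        t += timedelta(minutes=1)
    raise ValueError(f"no match for {expr!r} within a year")


print(next_fire("0 2 * * *", datetime(2025, 6, 1, 13, 45)))  # 2025-06-02 02:00:00
```

A production scheduler would jump directly to the next matching field value instead of scanning, but the brute-force loop makes the semantics easy to check.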

SQL Worker Actions: Spice now supports workers with sql actions for automated SQL query execution on a cron schedule:

    workers:
      - name: my_worker
        cron: 0 * * * *
        sql: 'SELECT * FROM lineitem'

For more information, refer to the Workers with a SQL action documentation.

The Spice Cookbook now includes 70 recipes to help you get started with Spice quickly and easily.

To upgrade to v1.4.0, use one of the following methods:

CLI:

    spice upgrade

Homebrew:

    brew upgrade spiceai/spiceai/spice

Docker:

Pull the spiceai/spiceai:1.4.0 image:

    docker pull spiceai/spiceai:1.4.0

For available tags, see DockerHub.

Helm:

    helm repo update
    helm upgrade spiceai spiceai/spiceai

- Update trunk to 1.4.0-unstable (#5878) by @phillipleblanc in #5878

- Update openapi.json (#5885) by @app/github-actions in #5885

- feat: Testoperator reports benchmark failure summary (#5889) by @peasee in #5889

- fix: Publish binaries to dev when platform option is all (#5905) by @peasee in #5905

- feat: Print dispatch current test count of total (#5906) by @peasee in #5906

- Include multiple duckdb files acceleration scenarios into testoperator dispatch (#5913) by @sgrebnov in #5913

- feat: Support building testoperator on dev (#5915) by @peasee in #5915

- Update spicepod.schema.json (#5927) by @app/github-actions in #5927

- Update ROADMAP & SECURITY for 1.3.0 (#5926) by @phillipleblanc in #5926

- docs: Update qa_analytics.csv (#5928) by @peasee in #5928

- fix: Properly publish binaries to dev on push (#5931) by @peasee in #5931

- Load request context extensions on every flight incoming call (#5916) by @ewgenius in #5916

- Fix deferred loading for datasets with embeddings (#5932) by @ewgenius in #5932

- Schedule AI benchmarks to run every Mon and Thu evening PST (#5940) by @sgrebnov in #5940

- Fix explain plan snapshots for TPCDS queries Q36, Q70 & Q86 not being deterministic after DF 46 upgrade (#5942) by @phillipleblanc in #5942

- chore: Upgrade to Rust 1.86 (#5945) by @peasee in #5945

- Standardise HTTP settings across CLI (#5769) by @Jeadie in #5769

- Fix deferred flag for Databricks SQL warehouse mode (#5958) by @ewgenius in #5958

- Add deferred catalog loading (#5950) by @ewgenius in #5950

- Refactor deferred_load using ComponentInitialization enum for better clarity (#5961) by @ewgenius in #5961

- Post-release housekeeping (#5964) by @phillipleblanc in #5964

- add LTO for release builds (#5709) by @kczimm in #5709

- Fix dependabot/192 (#5976) by @Jeadie in #5976

- Fix Test-to-SQL benchmark scheduled run (#5977) by @sgrebnov in #5977

- Fix JSON to ScalarValue type conversion to match DataFusion behavior (#5979) by @sgrebnov in #5979

- Add v1.3.1 release notes (#5978) by @lukekim in #5978

- Regenerate nightly build workflow (#5995) by @ewgenius in #5995

- Fix DataFusion dependency loading in Databricks request context extension (#5987) by @ewgenius in #5987

- Update spicepod.schema.json (#6000) by @app/github-actions in #6000

- feat: Run MySQL SF100 on dev runners (#5986) by @peasee in #5986

- fix: Remove caching RwLock (#6001) by @peasee in #6001

- 1.3.1 Post-release housekeeping (#6002) by @phillipleblanc in #6002

- feat: Add initial scheduler crate (#5923) by @peasee in #5923

- fix flight request context scope (#6004) by @ewgenius in #6004

- fix: Ensure snapshots on different scale factors are retained (#6009) by @peasee in #6009

- fix: Allow dev runners in dispatch files (#6011) by @peasee in #6011

- refactor: Deprecate results_cache for caching.sql_results (#6008) by @peasee in #6008

- Fix models benchmark results reporting (#6013) by @sgrebnov in #6013

- fix: Run PR checks for tools/ changes (#6014) by @peasee in #6014

- feat: Add a CronRequestChannel for scheduler (#6005) by @peasee in #6005

- feat: Add refresh_cron acceleration parameter, start scheduler on table load (#6016) by @peasee in #6016

- Update license check to allow dual license crates (#6021) by @sgrebnov in #6021

- Initial worker concept (#5973) by @Jeadie in #5973

- Don't fail if cargo-deny already installed (license check) (#6023) by @sgrebnov in #6023

- Upgrade to DataFusion 47 and Arrow 55 (#5966) by @sgrebnov in #5966

- Read Iceberg tables from Glue Catalog Connector (#5965) by @kczimm in #5965

- Handle multiple highlights in v1/search UX (#5963) by @Jeadie in #5963

- feat: Add cron scheduler configurations for workers (#6033) by @peasee in #6033

- feat: Add search cache configuration and results wrapper (#6020) by @peasee in #6020

- Fix GitHub Actions Ubuntu for more workflows (#6040) by @phillipleblanc in #6040

- Fix Actions for testoperator dispatch manual (#6042) by @phillipleblanc in #6042

- refactor: Remove worker type (#6039) by @peasee in #6039

- feat: Support cron dataset refreshes (#6037) by @peasee in #6037

- Upgrade datafusion-federation to 0.4.2 (#6022) by @phillipleblanc in #6022

- Define SearchPipeline and use in runtime/vector_search.rs. (#6044) by @Jeadie in #6044

- fix: Scheduler test when scheduler is running (#6051) by @peasee in #6051

- doc: Spice Cloud Connector Limitation (#6035) by @Sevenannn in #6035

- Add support for on_conflict:upsert for Arrow MemTable (#6059) by @sgrebnov in #6059

- Enhance Arrow Flight DoPut operation tracing (#6053) by @sgrebnov in #6053

- Update openapi.json (#6032) by @app/github-actions in #6032

- Add tools enabled to MCP server capabilities (#6060) by @Jeadie in #6060

- Upgrade to delta_kernel 0.11 (#6045) by @phillipleblanc in #6045

- refactor: Replace refresh oneshot with notify (#6050) by @peasee in #6050

- Enable Upsert OnConflictBehavior for runtime.task_history table (#6068) by @sgrebnov in #6068

- feat: Add a workers integration test (#6069) by @peasee in #6069

- Fix DuckDB acceleration ORDER BY rand() and ORDER BY NULL (#6071) by @phillipleblanc in #6071

- Update Models Benchmarks to report unsuccessful evals as errors (#6070) by @sgrebnov in #6070

- Revert: fix: Use HTTPS ubuntu sources (#6082) by @Sevenannn in #6082

- Add initial support for Spice Cloud Platform management (#6089) by @sgrebnov in #6089

- Run spiceai cloud connector TPC tests using spice dev apps (#6049) by @Sevenannn in #6049

- feat: Add SQL worker action (#6093) by @peasee in #6093

- Post-release housekeeping (#6097) by @phillipleblanc in #6097

- Fix search bench (#6091) by @Jeadie in #6091

- fix: Update benchmark snapshots (#6094) by @app/github-actions in #6094

- fix: Update benchmark snapshots (#6095) by @app/github-actions in #6095

- Glue catalog connector for hive style parquet (#6054) by @kczimm in #6054

- Update openapi.json (#6100) by @app/github-actions in #6100

- Improve Flight Client DoPut / Publish error handling (#6105) by @sgrebnov in #6105

- Define PostApplyCandidateGeneration to handle all filters & projections. (#6096) by @Jeadie in #6096

- refactor: Update the tracing task names for scheduled tasks (#6101) by @peasee in #6101

- task: Switch GH runners in PR and testoperator (#6052) by @peasee in #6052

- feat: Connect search caching for HTTP and tools (#6108) by @peasee in #6108

- test: Add multi-dataset cron test (#6102) by @peasee in #6102

- Sanitize the ListingTableURL (#6110) by @phillipleblanc in #6110

- Avoid partial writes by FlightTableWriter (#6104) by @sgrebnov in #6104

- fix: Update the TPCDS postgres acceleration indexes (#6111) by @peasee in #6111

- Make Glue Catalog refreshable (#6103) by @kczimm in #6103

- Refactor Glue catalog to use a new Glue data connector (#6125) by @kczimm in #6125

- Emit retry error on flight transient connection failure (#6123) by @Sevenannn in #6123

- Update Flight DoPut implementation to send single final PutResult (#6124) by @sgrebnov in #6124

- feat: Add metrics for search results cache (#6129) by @peasee in #6129

- update MCP crate (#6130) by @Jeadie in #6130

- feat: Add search cache status header, respect cache control (#6131) by @peasee in #6131

- fix: Allow specifying individual caching blocks (#6133) by @peasee in #6133

- Update openapi.json (#6132) by @app/github-actions in #6132

- Add CSV support to Glue data connector (#6138) by @kczimm in #6138

- Update Spice Cloud Platform management UX (#6140) by @sgrebnov in #6140

- Add TPCH bench for Glue catalog (#6055) by @kczimm in #6055

- Enforce max_tokens_per_request limit in OpenAI embedding logic (#6144) by @sgrebnov in #6144

- Enable Spice Cloud Control Plane connect (management) for FinanceBench (#6147) by @sgrebnov in #6147

- Add integration test for Spice Cloud Platform management (#6150) by @sgrebnov in #6150

- fix: Invalidate search cache on refresh (#6137) by @peasee in #6137

- fix: Prevent registering cron schedule with change stream accelerations (#6152) by @peasee in #6152

- test: Add an append cron integration test (#6151) by @peasee in #6151

- fix: Cache search results with no-cache directive (#6155) by @peasee in #6155

- fix: Glue catalog dispatch runner type (#6157) by @peasee in #6157

- Fix: Glue S3 location for directories and Iceberg credentials (#6174) by @kczimm in #6174

- Support multiple columns in FTS (#6156) by @Jeadie in #6156

- fix: Add --cache-control flag for search CLI (#6158) by @peasee in #6158

- Add Glue data connector tpch bench test for parquet and csv (#6170) by @kczimm in #6170

- fix: Apply results cache deprecation correctly (#6177) by @peasee in #6177

- Fix regression in Parquet pushdown (#6178) by @phillipleblanc in #6178

- Fix CUDA build (use candle-core 0.8.4 and cudarc v0.12) (#6181) by @sgrebnov in #6181

- return empty stream if no external_links present (#6192) by @kczimm in #6192

- Use arrow pretty print util instead of init dataframe / logical plan in display_records (#6191) by @Sevenannn in #6191

- task: Enable additional TPCDS test scenarios in dispatcher (#6160) by @peasee in #6160

- chore: Update dependencies (#6196) by @peasee in #6196

- Fix FlightSQL GetDbSchemas and GetTables schemas to fully match the protocol (#6197) by @sgrebnov in #6197

- Use spice-rs in test operator and retry on connection reset error (#6136) by @Sevenannn in #6136

- Fix load status metric description (#6219) by @phillipleblanc in #6219

- Run extended tests on PRs against release branch, update glue_iceberg_integration_test_catalog test (#6204) by @Sevenannn in #6204

- query schema for is_nullable (#6229) by @kczimm in #6229

- fix: use the query error message when queries fail (#6228) by @kczimm in #6228

- fix glue iceberg catalog integration test (#6249) by @Sevenannn in #6249

- cache table providers in glue catalog (#6252) by @kczimm in #6252

- fix: databricks sql_warehouse schema contains duplicate fields (#6255) by @phillipleblanc in #6255

Full Changelog: v1.3.2...v1.4.0
